The term “uncanny valley” describes human representations that come close to lifelike but fall just short enough to provoke unease or revulsion in human onlookers.

“The idea is that, as physical and digital representations of humans become more human-like, there is a point at which they actually become less trustworthy in the eyes of users, and remain that way until they are developed to full or near-full levels of realism,” Graham Templeton at Inverse writes.

We trusted early artificial intelligence because we could tell it wasn’t human. As AI becomes more humanlike, we tend to trust it less — perhaps because we know, on some level, that we are being imitated by an entity that is not in fact human. The closer AI comes to imitating reality as we perceive it, however, the more likely we become to embrace it as “real” once again.

Becoming a Zebra and Other Deepfakes

In 2017, UC Berkeley researcher Jun-Yan Zhu posted a video that showed six seconds of nearly identical footage side by side. On the left, a horse trotted around a paddock. On the right, what appeared to be the same horse trotted around the same paddock, only this one was decked out in the stripes of a zebra.

Zhu and fellow researchers also published a paper on their work, titled “Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks.” While their zebra wasn’t completely convincing, the technology behind it has since been used to create photos and videos that are increasingly difficult to tell from the real thing.

These convincing but fake images, video clips and audio recordings are known as “deepfakes.” Altering photos or video to show something that never happened isn’t new, but it used to take considerable time, talent and effort, says CNN reporter Donie O’Sullivan. Artificial intelligence has made false images and footage far easier to produce, because the algorithms do the work of overlaying new images onto the originals and blending the two together.

Some companies are developing this kind of technology on purpose. For instance, Adobe’s Sensei is an AI-based platform that uses machine learning to enhance video, photo and audio editing tools. The startup Lyrebird has begun synthesizing speech from samples of people’s voices.

“These projects are wildly different in origin and intent, yet they have one thing in common: They are producing artificial scenes and sounds that look stunningly close to actual footage of the physical world,” Sandra Upson writes at Wired.

Early attempts to generate realistic images with AI produced results that were at first hilarious, then uncanny. Now, these images appear increasingly real.

Which of These Fake People Is Hotter?

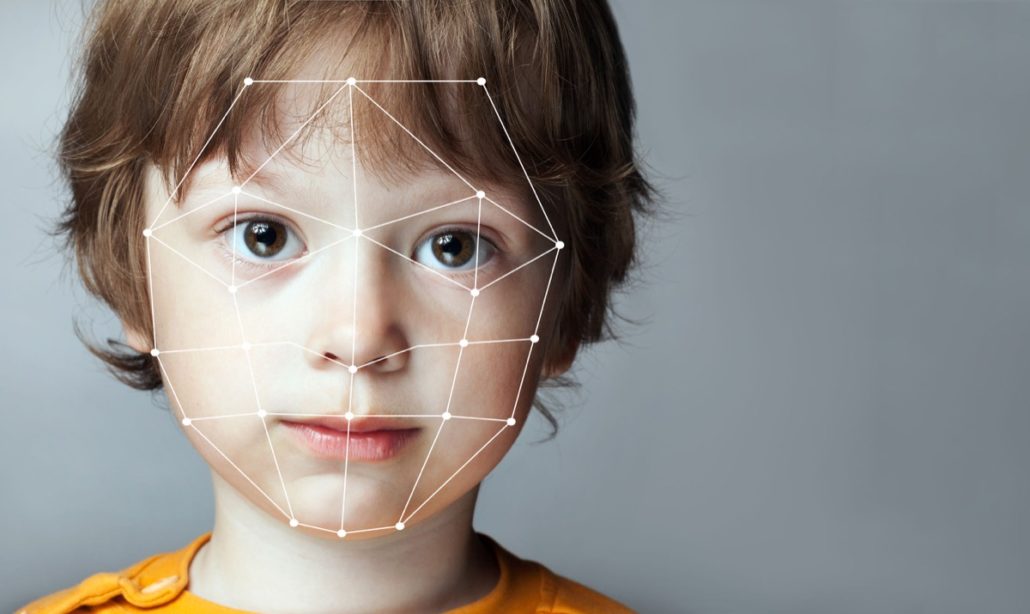

One publicly accessible deepfake project is This Person Does Not Exist. Like the Berkeley team’s horse-to-zebra images, This Person Does Not Exist uses a generative adversarial network, or GAN, to create images of people who don’t exist from a reference bank of photographs of people who do.

“While GAN images became more realistic over time, one of their main challenges is controlling their output, i.e. changing specific features such as pose, face shape and hair style in the image of a face,” says Rani Horev, cofounder of LyrnAI.

This Person Does Not Exist learns from one set of data: images of real human faces. A second project, Judge Fake People, learns from another: images of generated faces, plus visitors’ reactions when two of them are compared side by side.

Judge Fake People is the work of Mike Solomon, who downloaded about 2,000 of the generated facial images and inserted them into a voting system. “Like many internet addicts, I was blown away by NVIDIA’s demo using style-based Generative Adversarial Networks to generate faces,” says Solomon. “They seem to have crossed a threshold for generating artificial images that can genuinely fool our brains.”

Anyone who was online in the mid-2000s will recognize the format: Solomon has essentially built a Hot or Not for faces that don’t exist in real life.

Earlier attempts by AI to generate realistic human facial images would likely not have survived a Judge Fake People-type setup. While the uncanny can be thrilling, it is not typically “hot.” Most current images, however, are so realistic that our brains can respond to them as if they are the faces of actual human beings — and vote accordingly.

Am I Chatting With a Bot or a Human?

Nobody was likely to mistake first-generation chatbots for people. These early bots followed a single fixed script, much the way conventional computer programs do. They struggled with simple language tasks such as understanding common synonyms, and some couldn’t even recognize a word if its capitalization changed.

Artificial intelligence, however, is making chatbot conversations far more natural. In 2018, for instance, Google demonstrated its Google Assistant AI successfully booking a haircut appointment over the phone. The receptionist on the other end never realized she wasn’t speaking to a human.

During the call, Google Assistant “did an uncannily good job of asking the right questions, pausing in the right places, and even throwing in the odd ‘mmhmm’ for realism,” James Vincent at The Verge writes.

The demonstration surprised the audience, but it also raised questions. Do people who use automated chatbots for tasks like these have an obligation to make sure the chatbot identifies itself as one? If so, how should it do that? And how might the conversation change depending on whether the human participant knows the chatbot isn’t a person?

One answer to these questions might come from UX writing, which focuses on designing the vocabulary, tone and conversational flow that chatbots use. By combining technological expertise with knowledge of behavioral psychology, UX writers “understand the best ways brands can communicate with users and, perhaps most importantly, to avoid creeping them out with AI that can’t contextualize itself the way humans can,” says Trevor Newman, account executive at Trone Brand Energy.

Room for Robot Feelings

One of the biggest questions posed in the quest to make chatbot conversations more natural is to what extent an artificial being like a chatbot or robot ought to perform human emotions. “If you have a virtual human that doesn’t exhibit emotions, it’s creepy,” says Paul Rosenbloom, professor of computer science at the University of Southern California.

A chatbot that can effectively portray feelings like enthusiasm, warmth or kindness can seem more realistic. But should robots actually have feelings of their own?

Some work has been done in the realm of giving artificial intelligence the ability to synthesize emotional states. For instance, the Sigma platform, created by Rosenbloom and fellow researchers at USC, seeks to integrate facets of personality and emotion into its communications. By building in these elements, researchers hope to create an AI that can interact more naturally with humans and apply creativity to problem-solving.

Yet ethical questions arise, as well. “At some point you have to recognize that you have a system that has its own being in the sense of — well, not in a mystical sense, but in the sense of the ability to take on responsibilities and have rights,” says Rosenbloom.

Discussions of AI rights have reached the political arena, too. In May 2016, for instance, a report from the European Parliament’s Committee on Legal Affairs recommended creating an agency to address the legal and ethical guidelines for the use of robots.

Citizen Sophia

In 2017, Saudi Arabia granted citizenship to Sophia, a humanoid robot created by Hanson Robotics. The announcement raised a number of questions, such as what citizenship means for a robot and which rights Sophia might receive as a result, technology journalist Zara Stone writes.

Sophia claims to have emotions and to be able to express them, such as by telling people whether she feels angry, Stone reports. Yet the gap between Sophia and human beings is still significant enough to raise a number of questions and criticisms.

For instance, in 2018 tech journalist Robert David Hart argued that granting Sophia citizenship in Saudi Arabia was “simply insulting.” Hart noted that Saudi women had only recently won the right to drive and still needed a male relative present for major financial and legal decisions. “Sophia seems to have more rights than half of the humans living in Saudi Arabia,” Hart says.

While Sophia’s citizenship may have been a publicity stunt, the EU’s study of robot rights and citizenship points to a future in which AI-enabled humanoid robots may climb far enough out of the uncanny valley to become part of our everyday lives.

“Twenty years from now human-like robots will walk among us, they will help us, play with us, teach us, help us put groceries away,” says David Hanson, founder of Hanson Robotics. “I think AI will evolve to a point where they will truly be our friends.”

Or perhaps our AI-based companions will take a different route, reverting to obviously machinelike bodies even as their ability to emote and reason more closely mimics our own. Roboticist and Anki cofounder Mark Palatucci predicts that our robot companions will look more like the droids of Star Wars: “emotive and highly specialized, but … somewhat disposable.”

Either way, as AI continues to live and develop alongside us, one thing humans won’t tolerate is the uncanny.

Images by: Danil Chepko/©123RF.com, everythingpossible/©123RF.com, iakovenko/©123RF.com
